Are We Forgetting Humans When We Design AI Products?

Why AI-driven product decisions can weaken user-centered design, and the hidden risk of over-automation in AI product design.

As product designers, we’re trained to balance UX, business goals, and technical constraints.

AI products promise speed, scale, and intelligence. In AI-driven products, that balance shifts. The questions we ask, and the trade-offs we make, change in ways that feel unfamiliar.

Designing AI products feels fundamentally different from traditional UX work.

Decisions are no longer about clarity or usability alone; they are shaped by model behavior, system constraints, and automation pressure.

Designing for AI no longer starts with “What’s the right experience?”

It often starts with “Who is this decision really serving?”

When Design Decisions Start to Feel Different

As product designers, we are used to working at the intersection of UX, business goals, and technology.

We collaborate, align, and negotiate trade-offs, but usually within a system that behaves predictably.

Designing for AI changes this completely.

In AI product development, the system is no longer fully deterministic. Outputs vary. Behaviors evolve. And because of this, our design priorities constantly shift.

In my recent work on AI-driven products, I keep coming back to the same questions:

Are we designing for consistent UI, or for system constraints?

Do we aim for a human feeling, or do we intentionally show that “AI is thinking”?

Should the user feel in control, or should the model feel authoritative?

Are we sacrificing UX because of technical limitations, or shaping UX within those limitations?

Are we testing the product flow, or are we testing the model itself?

Are we optimizing for people, or for systems?

And maybe the hardest question of all:

Is the human still the decision-maker, or just a data point in the loop?

When the Question Is No Longer “What’s Right?”

In AI products, decisions rarely revolve around what is objectively “correct.”

Instead, they revolve around who the decision serves.

This becomes especially visible in testing.

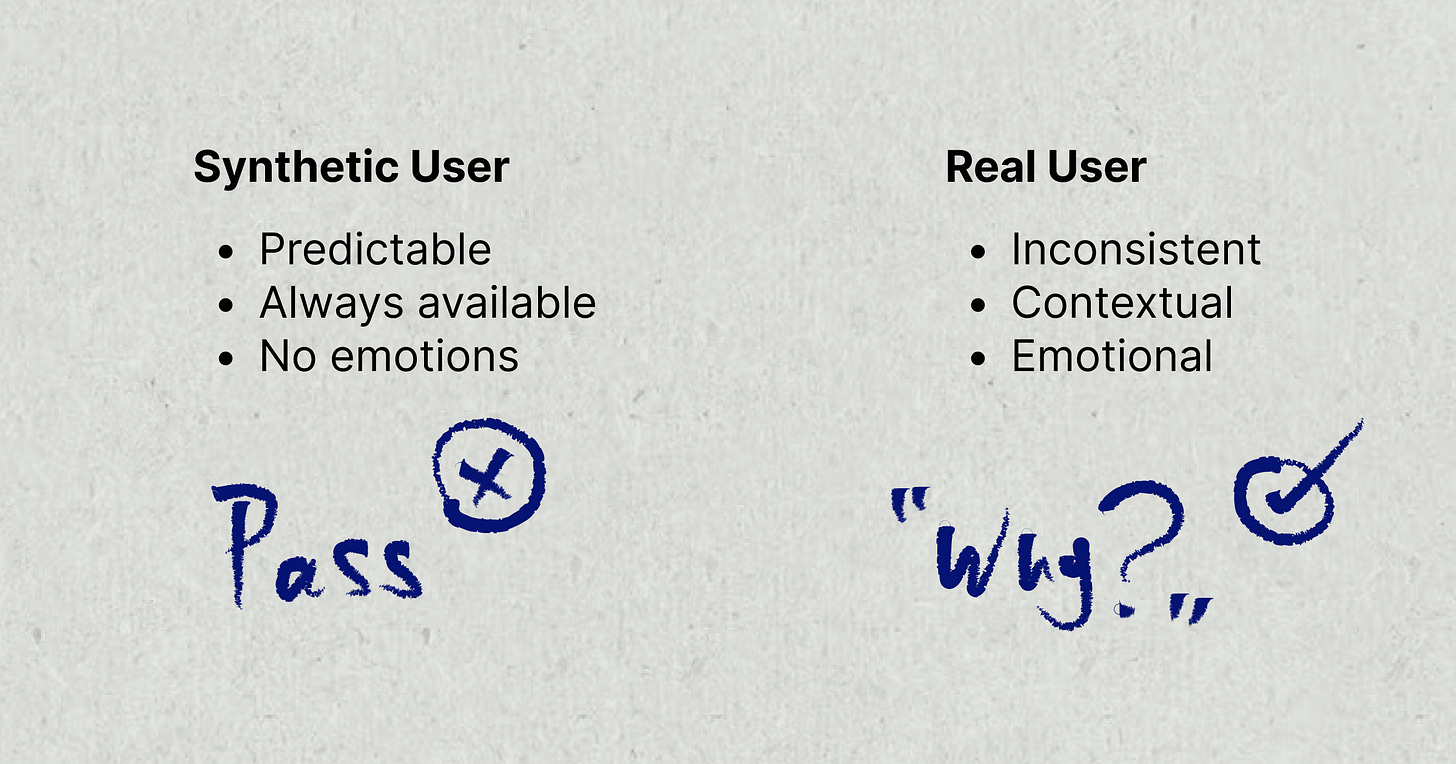

Recently, in an AI design project, the product was already live, yet still required extensive testing. Some team members strongly suggested introducing an AI agent to automatically test scenarios at scale.

From a system perspective, it made sense.

AI-Based Testing:

Fast

Cheap

Scalable

Model-centric

But as a product designer, this proposal stopped me every time.

Who is the user of this product?

Whose needs are we solving?

Who benefits from this system behaving “correctly”?

These are not philosophical questions.

They are the foundation of product design.

If we move entirely toward AI-driven testing, where does emotional design live?

How do we understand follow-up needs, edge cases, hesitation, trust, or confusion?

Can an automated agent ever reveal how a product fits into real-life problem-solving?

Testing with Real Users:

Slow

Fragmented

Expensive

Human-centric

This tension led me to revisit a concept that keeps appearing in AI discussions:

Human-in-the-Loop vs. Human-out-of-the-Loop systems [1].

Human-out-of-the-Loop systems operate fully autonomously, with little or no human oversight. They promise efficiency, but often raise serious concerns around accountability, transparency, and bias.

Human-in-the-Loop systems intentionally keep humans involved in decision-making, validation, and oversight, not as a fallback, but as a core part of the system.

This topic came up again at a design event I attended called “UX Meets AI: A Love Story with Red Flags.”

One question stayed with me:

“Aren’t synthetic user tests enough for our research process?”

It was striking how common this concern was, not just in my own team, but across the design community.

It made me wonder: “Are we slowly forgetting about real users?”

With the AI hype growing stronger every day, are humans still decision-makers, or have they become supervisors, validators, or worse… just training data?

The Core Conflict

Here’s the tension I see clearly now:

AI-driven tests measure product behavior

Real user tests measure product meaning

And meaning cannot be automated away.

No matter how advanced our systems become, quantity will never replace quality.

Let’s be honest:

Conversation ≠ value

Engagement ≠ usefulness

High interaction does not mean the product solves a real problem.

And automation does not automatically create trust.

In fact, excessive automation in product development often increases risk instead of reducing it.

The more we disconnect from real users, the weaker our user-centered value becomes.

Final Thought

Designing for AI is not about choosing between humans and systems. It’s about remembering who the system is ultimately for.

And I wonder: where do you draw the line between automation and human judgment in AI products?

[1] Human in the Loop AI. (n.d.). Evozon. https://www.evozon.com/glossary/ai/what-is-the-difference-between-human-in-the-loop-and-human-out-of-the-loop/